WITNESS and the Facebook Trump Suspension

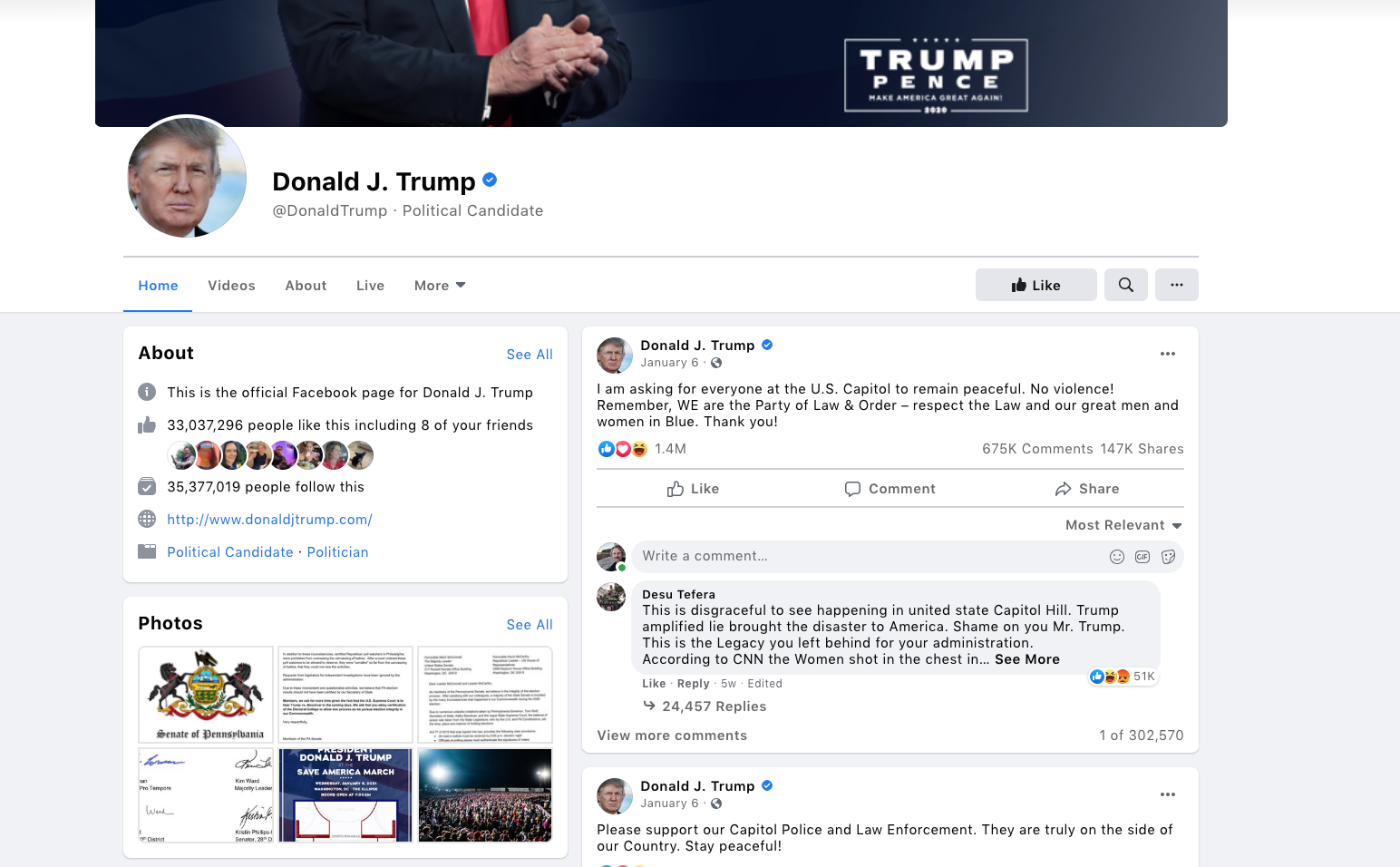

WITNESS submitted the following comment to the Facebook Oversight Board on their consideration of the suspension of former President Trump from Facebook and Instagram.

For further discussion on these issues see our recent post: Truth, Lies and Social Media Accountability in 2021: A WITNESS Perspective on Key Priorities

Summary

WITNESS, an international human rights organization helping people use video and technology, notes:

*All of our comments are made in light of the fact that the Oversight Board has not been granted the power to push Facebook on policy change, on product/technical infrastructure change, on global resourcing or on Facebook’s response to extralegal political pressures globally.

*Public figures need greater scrutiny, not less. Account suspension was correct.

*Public interest exceptions should apply to vulnerable speakers, not to those in power who have other options for speech.

*Preservation of critical speech and content can be achieved via evidence lockers.

*Off-platform context and dangerous speech principles are critical to making decisions, not optional.

*Facebook’s rules are not clear to ordinary people: they suffer from inconsistency in application, bias and lack of appeal.

*Global enforcement requires far greater contextual understanding, including beyond majority elites, as well as resourcing for moderation and for civil society globally, and support to content moderation workers. It also requires insulation from the domestic extralegal pressures that compromise Facebook in countries around the world.

Our Submission

WITNESS (witness.org) is an international human rights organization that helps people use video and technology to promote and defend human rights. We work with human rights organizations, social movements and individual witnesses in over 100 countries who engage in human rights-based activity on Facebook’s platforms, and who face threats from abuse of Facebook’s platforms.

Below we address questions raised by the Oversight Board.

However, we first emphasize that creating an equitable, transparent and human rights-centered approach to content moderation requires power that has not been granted to the Oversight Board. Fully confronting these questions requires from Facebook: a) a commitment to changes in overall policy; b) direct input from this decision-making into both product development and underlying technical infrastructure, including algorithms; c) far more significant human and technical resourcing of, and attention to, countries outside the US and Europe, and to the needs, demands and harms of vulnerable populations both in those countries and in the US and Europe; d) a concerted effort to insulate country-level Facebook staff and country-level decision-making from political influence and illegitimate government pressure.

The OB asks: If Facebook’s decision to suspend President Trump’s accounts for an indefinite period complied with the company’s responsibilities to respect freedom of expression and human rights, if alternative measures should have been taken, and what measures should be taken for these accounts going forward: More often than not, world leaders who incite violence and hatred online (and share harmful misinformation and disinformation) get away with it for too long. Human rights activists have consistently documented this in a range of global contexts, noting situations involving leaders in the USA, Brazil, India, and the Philippines. The decision to suspend former President Trump’s account came too late, not too early, as it did with other world leaders, e.g. Senior General Min Aung Hlaing in Myanmar. However, it is important to have a clear, consistent, transparent process for providing warnings, for appropriately applying earlier temporary account suspensions or content removals, and ultimately for permanently suspending accounts, all with right of appeal.

The OB asks: How Facebook should treat the expression of political candidates, office holders, and former office holders, considering their varying positions of power, the importance of political opposition, and the public’s right to information: Facebook’s explicit provision of a newsworthiness exemption for all politicians’ speech has provided cover for leaders to share false information or incite hate, and for Facebook to act inconsistently. When it comes to incitement to hate, or sharing of harmful misinformation (for example on COVID-19), leaders should be subject to greater scrutiny when they push boundaries on platforms, not less. Newsworthiness exceptions and related public interest protections for posts or speakers do have a place… in protecting critical evidence of rights violations and vulnerable speakers within the public sphere, rather than leaders who have other options for public speech and who have generally been given ‘the benefit of the doubt’. Considerations of protecting important information from an archival perspective can be fulfilled via evidence lockers, which preserve content that has been shared on the platform without making it public.

The OB asks: How Facebook should assess off-Facebook context in enforcing its Community Standards, particularly where Facebook seeks to determine whether content may incite violence: Facebook should assess off-platform context if the genuine purpose of intervention is to prevent violence rather than provide policy loopholes for politicians to jump through, and if the company is legitimately trying to enforce its standards in accordance with human rights standards. This off-platform context provides information that helps ascertain the real-world impact of online speech, and whether this impact justifies curtailing that speech. This must be complemented with real-world resourcing and responsiveness to civil society globally, particularly to groups vulnerable to dangerous speech from a politician. The Dangerous Speech Project provides excellent guidance on this approach.

The OB asks: The accessibility of Facebook’s rules for account-level enforcement (e.g. disabling accounts or account functions) and appeals against that enforcement: Facebook’s rules are not clear for ordinary people. For a decade WITNESS’s partners and human rights defenders around the world have complained about take-downs of accounts and content without clarity or with apparent bias. Facebook should be transparent about how decisions are made both for leaders and for ordinary users, and hew to human rights principles of proportionality, legitimacy and specificity rather than over-broad, inconsistent deplatforming. The Santa Clara Principles for content moderation and the 2018 recommendations of Professor David Kaye, the former UN Special Rapporteur on Freedom of Expression and Opinion, provide clear roadmaps, generally accepted within the human rights community, for how to do this.

The OB asks: Considerations for the consistent global enforcement of Facebook’s content policies against political leaders, whether at the content-level (e.g. content removal) or account-level (e.g. disabling account functions), including the relevance of Facebook’s “newsworthiness” exemption and Facebook’s human rights responsibilities: Consistent global enforcement is essential. It must be adequately resourced and come with worker protections for vulnerable content moderation workers subject to trauma (see the work of Professor Sarah Roberts). It must be done with a clear understanding of language and cultural context that is informed not only by majority elites in countries, but also by the diversity and representation of historically marginalized communities in particular countries. Facebook must act quickly when policy decisions in particular countries are impacted by domestic political pressures outside of law or platform rules. Facebook must invest more money in content moderation and more resources in supporting global civil society advocates and entities who act as watchdogs. Otherwise rules will be applied inconsistently and reinforce trends toward US and European exceptionalism in content policy.

A newsworthiness exception should be far more applicable to protecting critical evidence of rights violations and vulnerable speakers within the public sphere than to leaders who have other options for public speech and who have generally been given ‘the benefit of the doubt’. Considerations of protecting important information from an archival perspective can be fulfilled by preserving content that has been shared on the platform but not making it public; these “evidence lockers” provide access to critical information for accountability purposes under privacy-preserving conditions.